Working with data can be complex, and often the ‘right’ answer for the purpose is the result of a series of iterations where business subject matter experts (SMEs) and data professionals collaborate.

This process is iterative by nature. Even with the best effort and available knowledge, the resulting data model will change as understanding grows, which is inherent in working with data.

In other words, the data solution model is not something you can always get right in one go. In fact, it can take a long time for a model to stabilise, and in today's fast-paced environments it may never do so.

Choosing the right design patterns for your data solution helps maintain both the mindset and the capability for the solution to keep evolving with the business and the technology, and to reduce technical debt on an ongoing basis.

This mindset also enables some truly fascinating opportunities, such as maintaining version control of the data model, the design metadata, and their relationship, so that the entire data solution can be represented as it was at a certain point in time. It even allows different data models for different business domains.

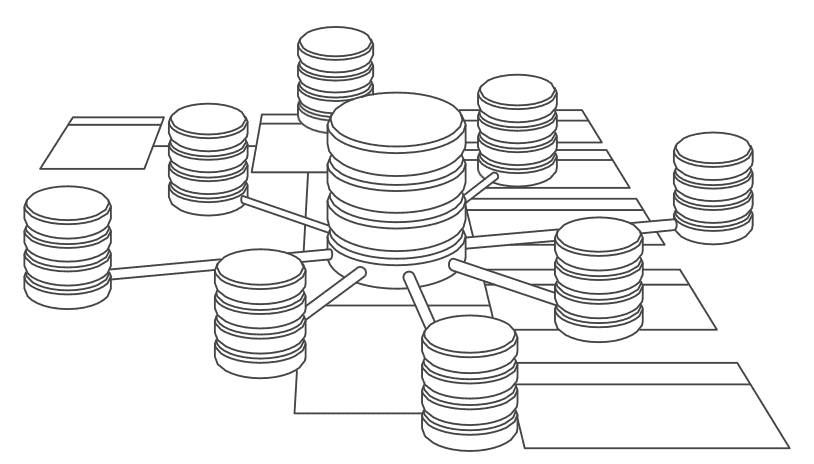

This idea, combined with the capability to automatically (re)deploy different structures and interpretations of data, as well as the data logistics to populate or deliver them, is what we call ‘Data Solution Virtualisation’.

The idea of an automated virtual data solution was conceived while working on improvements for generating Data Warehouse loading processes. It is, in a way, an evolution in ETL generation thinking. Combining Data Vault with a Persistent Staging Area (PSA) provides additional functionality because it allows the designer to refactor all, or parts, of the solution.
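To make the refactoring idea concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. It is an illustration under assumptions, not the approach used by any particular tool: the table `psa_customer`, the view `hub_customer`, the metadata dictionary, and the helper functions are all hypothetical names. The raw records stay in the PSA table, the hub-style structure is only a view generated from metadata, and changing the metadata followed by a redeploy yields a different interpretation of the same data.

```python
import sqlite3


def hub_view_sql(view_name, psa_table, business_key):
    """Build a CREATE VIEW statement for a hub-style structure over a PSA table.

    All names are hypothetical; identifiers are interpolated directly, so this
    sketch assumes trusted design metadata rather than user input.
    """
    return (
        f"CREATE VIEW {view_name} AS "
        f"SELECT {business_key} AS business_key, MIN(load_ts) AS first_seen_ts "
        f"FROM {psa_table} GROUP BY {business_key}"
    )


def deploy(conn, metadata):
    """Drop and recreate the view described by the metadata (a redeploy)."""
    conn.execute(f"DROP VIEW IF EXISTS {metadata['view_name']}")
    conn.execute(hub_view_sql(**metadata))


def business_keys(conn, view_name):
    """Return the business keys currently exposed by the view, sorted."""
    rows = conn.execute(
        f"SELECT business_key FROM {view_name} ORDER BY business_key"
    )
    return [row[0] for row in rows]


# A tiny in-memory PSA holding the raw history as it was received.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE psa_customer (customer_code TEXT, email TEXT, load_ts TEXT)"
)
conn.executemany(
    "INSERT INTO psa_customer VALUES (?, ?, ?)",
    [
        ("C1", "a@example.com", "2024-01-01"),
        ("C1", "a@example.com", "2024-01-02"),
        ("C2", "b@example.com", "2024-01-01"),
    ],
)

# First interpretation: the customer code is the business key.
metadata = {
    "view_name": "hub_customer",
    "psa_table": "psa_customer",
    "business_key": "customer_code",
}
deploy(conn, metadata)
keys_v1 = business_keys(conn, "hub_customer")

# Refactor: change only the metadata and redeploy; the PSA data is untouched.
metadata["business_key"] = "email"
deploy(conn, metadata)
keys_v2 = business_keys(conn, "hub_customer")
```

Because the structure is derived rather than stored, the design decision about the business key can be revisited without reloading any data. A real solution would also generate the surrounding data logistics and keep the metadata under version control.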

Being able to deliver a virtual data solution provides options. It does not mean you have to virtualise the entire solution; you can pick and choose which approach works best for a given scenario, and change technologies and models over time.
